Introduction to Databricks

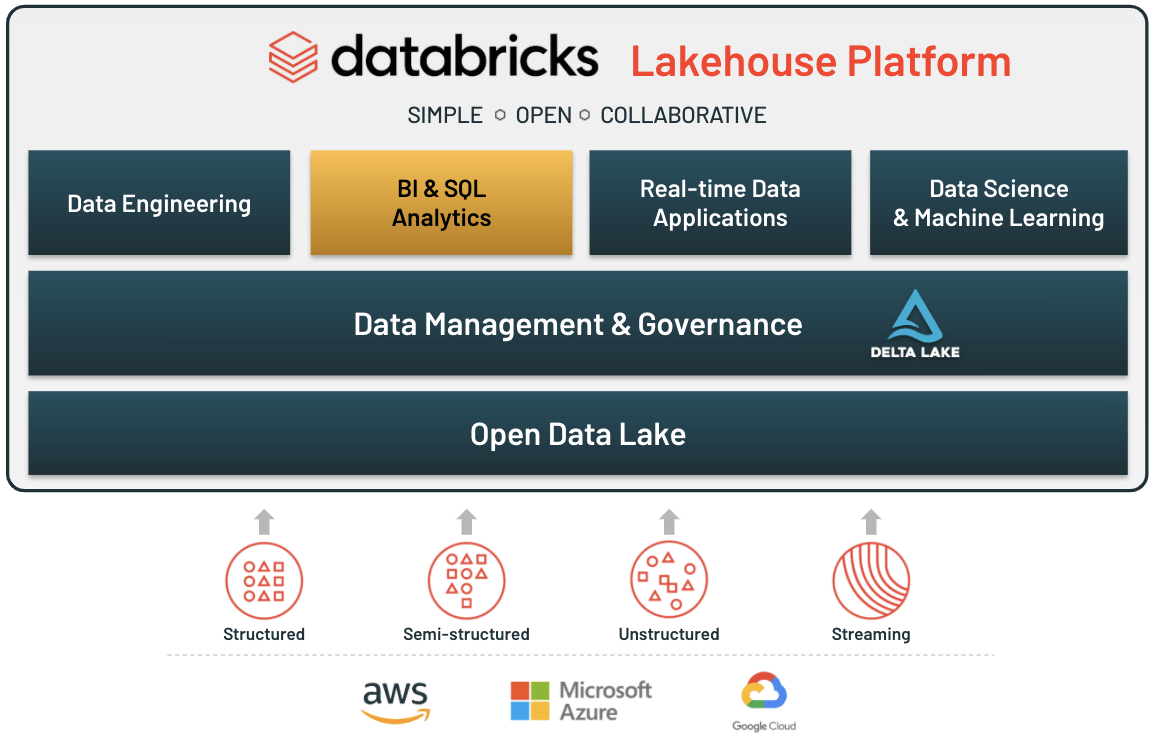

Databricks is a unified analytics platform that is gaining prominence in the realm of data science and engineering. Designed to streamline data workflows and enhance collaboration among teams, Databricks leverages the power of big data processing and machine learning, offering organizations a robust environment for their analytics and data science initiatives. At its core, Databricks integrates closely with Apache Spark, enabling high-performance computing, data processing, and advanced analytical capabilities.

In the modern data landscape, the significance of Databricks cannot be overstated. It serves as a bridge between data engineers, data scientists, and business analysts, allowing for a seamless flow of information and insights across various stages of data processing. Its support for collaborative notebooks encourages teamwork and fosters a culture of shared learning, where professionals can build on each other’s work, share insights, and iterate quickly. This collaborative approach empowers teams to translate complex data analyses into actionable business outcomes.

One of the key components that make Databricks a powerful tool is its compatibility with data lakes, which are foundational for storing vast amounts of structured and unstructured data. By providing a single platform for data ingestion, storage, and processing, Databricks eliminates the traditional silos that hinder data accessibility and utilization. Industries such as finance, healthcare, and e-commerce are increasingly adopting Databricks to enhance their data-driven strategies. For instance, numerous organizations report achieving a 50% reduction in time spent on data preparation and analysis, demonstrating the platform’s efficiency.

Overall, Databricks represents a vital advancement in data analytics, providing organizations with the tools necessary to navigate and capitalize on their data. By fostering collaboration, simplifying data workflows, and enhancing the capabilities of data processing and machine learning, it is transforming how businesses approach analytics in the contemporary landscape.

Setting Up Your Databricks Environment

To begin utilizing Databricks effectively, one must first establish a Databricks workspace. This section will provide precise steps for creating an account and launching a new workspace, ensuring a seamless experience in managing data, applications, and collaborative efforts.

First, visit the Databricks website and click on the “Get Started” button. You will need to create an account by providing basic information like your email address and setting a password. After registration, confirm your email to activate your account. Once your account is activated, log in to the Databricks platform.

Next, you will be prompted to create a new workspace. Choose a suitable name and region for your workspace to optimize performance, as these options can affect latency and data accessibility. After completing these selections, click on the “Create Workspace” button. The process may take a few moments as the platform provisions the necessary resources.

Upon the workspace being created, the next step involves configuring your cluster settings. Navigate to the “Clusters” section and click “Create Cluster.” Here, you will need to define settings such as cluster name, version, and type of workload. It is advisable to select the Standard mode for most applications. For optimal performance, consider specifying a higher instance type or scaling the number of workers depending on your workload requirements.

After you configure the cluster, it is essential to import any existing data you plan to analyze. You can do this by navigating to the “Data” section and selecting “Add Data.” Databricks supports various data sources, including cloud storage systems like AWS S3 and Azure Blob Storage. Follow the necessary steps to connect to your data source and import the files required for your project. Ensuring that you have properly established security measures and user access controls will enhance the collaborative aspect of your workspace.

Building Your First Data Pipeline

Constructing a data pipeline using Databricks involves several methodical steps that facilitate the ingestion, transformation, and analysis of data. First, ensure that you have access to the Databricks environment, where you can create and manage notebooks. Once logged in, you can initiate the process by creating a new notebook, which serves as the central interface for developing your code.

The first phase of building a data pipeline is data ingestion. Databricks supports various data sources, including cloud storage solutions like AWS S3, Azure Blob Storage, and even traditional databases. To begin, you can utilize the built-in connectors to import your data. For example, if you are pulling data from a CSV file located in S3, you can use Spark’s DataFrame API, specifically with the command: spark.read.csv("s3://your-bucket/your-file.csv"), to load the data.

Once the data is ingested, the next step is transformation, a critical process often referred to as ETL (Extract, Transform, Load). Databricks allows you to apply a myriad of transformations using its extensive suite of libraries. You can filter, group, and aggregate data within your notebook. For instance, if you wanted to calculate the average of a specific metric, you can easily achieve this with dataframe.groupBy("column").avg("metric"), enhancing the clarity and usability of your dataset.

Analysis comes next. Utilizing Databricks’ collaborative features, you can create visualizations or run machine learning models directly within your notebook. By leveraging built-in functions, your code becomes more efficient and easier to interpret. It is recommended to regularly document your code within the notebook, promoting better understanding and maintenance of your data pipeline.

In conclusion, building your first data pipeline in Databricks encapsulates a structured approach involving data ingestion, transformation, and analysis. By mastering these steps, you can efficiently manipulate and derive insights from your data, paving the way for more sophisticated projects in the future.

Conclusion and Key Takeaways

Throughout this guide, we have explored the capabilities of Databricks and its vital role in enhancing data analytics and machine learning workflows. As a unified platform, Databricks allows data professionals to seamlessly collaborate while leveraging Apache Spark’s processing power. The importance of this platform cannot be overstated, as it significantly simplifies the complexities often associated with big data analysis and machine learning projects.

Key takeaways from this discussion include the integration of Databricks into existing data pipelines, which can streamline operations and improve efficiency. Additionally, we highlighted essential tips for success when implementing Databricks: ensure robust data governance, explore the various workspace features, and take full advantage of built-in machine learning tools. While the platform is powerful, it also comes with potential pitfalls that users should be mindful of, such as improper configuration and data security concerns.

Furthermore, we encourage readers to engage in continuous learning concerning Databricks. Resources such as webinars, comprehensive documentation, and vibrant community forums provide valuable insights that can help users stay updated with the latest developments. Delving into advanced features like Delta Lake and MLflow can unlock even greater potential, further enhancing your organization’s data solutions.

In conclusion, adopting Databricks within your organization can lead to transformative changes in how you handle data and derive insights. By adhering to best practices and continuously seeking knowledge, users can fully harness the power of this platform. Therefore, we encourage you to explore Databricks’ functionality, broaden your skills, and ultimately gain a competitive edge in the evolving landscape of data analytics and machine learning.